Understanding AI Basics

Artificial Intelligence does have a role outside of science fiction. In fact, maybe it has (or should have) a role in your business. Before you start preparing for the robot takeover, get to know the basics. They may surprise you.

What Doesn’t Exist

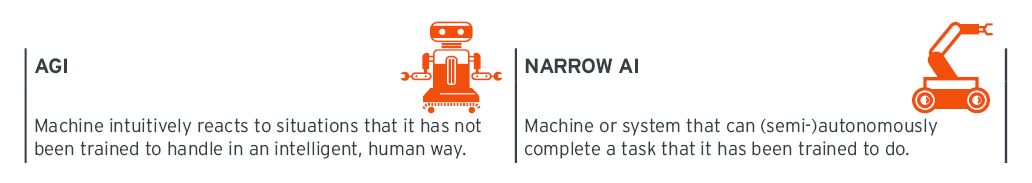

Science fiction may have made you more familiar with Artificial General Intelligence (AGI), which is different from the “AI” being implemented in business use cases today. Artificial General Intelligence is the ability of a machine to react intelligently and intuitively, in a human-like way, to situations it has not been trained to handle. An example of AGI would be a system that experiments with its surroundings to gain new insight and then correctly applies that insight to a completely different situation.

You should know that AGI does not exist yet. In fact, the way AI is currently designed will probably have to change dramatically before machines reach human-like general intelligence.

What Does Exist

Today, we work with Narrow AI.

The name sums it up; instead of being intelligent enough to figure out what to do in any situation, Narrow AI is a machine or system that can (semi-)autonomously complete a task that it has been trained to do.

And only that.

It can continue to learn and get better at the one task it has been trained to do, but it isn’t going to go rogue and seduce your husband or wife. Narrow AI (usually just called AI) can be focused on many different types of tasks.

Common examples include recommendation engines, anomaly detection, image classification, natural language processing (NLP), and much more. In fact, AI is an umbrella term that covers many different disciplines and technologies, including NLP, Machine Learning (ML), Machine Reasoning, Robotics, and Computer Vision.

Each of these disciplines is its own research field, but they are often brought together to work in concert. If you’re starting to wonder why all of these very different technologies are referred to as AI, you’re not alone. AI is far too ambiguous a term, and we’ll get into that while debunking some of the myths around AI.

Myth-Busting AI

There are lots of solutions used in businesses or products that “have AI”, “are AI”, or are “AI-powered”. There is a lot that you have to unpack from those short statements. Remember, “AI” could be anything from “composing” music to hospitality robots. A good place to start in understanding AI is looking at some of the common myths that have arisen around AI technology.

Anything Is Possible with AI

A side effect of being able to call nearly everything AI is that it gives the impression that AI can do anything. Take for example those humanoid robots that can see you, listen to you, and think of things to say all on their own. Narrow AI functionalities can be layered one on top of the other to create the appearance of “intelligence”. Combining robotics, image classification, natural language understanding and generation, and computer vision, for example, will produce something that sees, hears, and responds to you.

But the basic difference between Artificial General Intelligence and systems like these is that the vision, hearing, and language generation components are each doing only what they have been taught, and learning only within their own domain. This is very impressive, but there are still many limitations to what AI can accomplish. John McCarthy, a major influence in computer science, is widely credited with describing the phenomenon very well: as soon as it works, no one calls it AI anymore.

And this is true.

As an AI capability matures, it is more often referred to by a more specific name and loses its AI mystique. “Image classification” does not sound nearly as impressive as “AI”, but there you go.

AI Is Going to Make Humans Redundant

There is a lot of buzz about the new levels of efficiency and cost savings that AI will bring to the enterprise. This also stirs up fears that AI will replace humans in the workplace, leaving large numbers of people without jobs because a system can do their work faster and more reliably.

There is a grain of truth to this, in that people’s jobs will change as AI develops. What is more likely, though, is that certain tasks will be supported by AI systems that automate the repetitive aspects and deliver predictive analysis, enabling the people in those jobs to make better decisions.

Before adopting any AI technology, critically consider how to make a compassionate transition to using AI to support your employees and operations. In particular, ensure that your employees are trained in any new skills they may need and that they understand how best to use the new AI tool. This decision has to be divorced from the hype and based on concrete business benefits that account for any human cost.

Regulating AI Is Impossible

Although there is very little concrete regulation of AI development and use, several regulatory bodies are tackling this challenge. Additionally, multiple publicly available frameworks help govern the use and development of AI. Even though developing regulation is a notoriously slow process, there is measurable progress on the AI topic. On October 20, 2020, the European Parliament voted on and adopted recommendations on what a future regulation should address.

Top 10 Real-World Artificial Intelligence Applications

AI is out there in the real world, making a large impact on business. Here are the top 10 applications that you should know about.

Top 10 Applications

- Image classification: Image classification is a classic example, and one that is being applied in nearly every industry. The model learns to categorize an image based on what it is, or what it is not. Systems can then recognize a picture of a dog, a human, a car, or anything else they have specifically been trained to find.

- Recommendation Engines: Recommendations are already familiar territory in delivering unique customer experiences. They are generated from variables such as a user’s own history of behavior and the behavior of other users with similar habits.

- Anomaly Detection: This is popular in cybersecurity, but is applicable across many industries. A system monitors a repetitive action or behavior, and notifies a human counterpart if unfamiliar, questionable, or malicious behavior is detected.

- Chatbots: These are one of the most visible AI applications, used for customer service, internal communications, human resources, and many other use cases. They are one of the more mature applications on the market, and are getting better every year.

- Conversational search functions: One of the spin-off improvements of chatbots is a conversational search function. Here, the system must be able to respond to a question asked in a natural, human way. This means being able to interpret rambling questions that may not include the right keywords. These are being used for self-service diagnostic chatbots, where users can describe their symptoms in their own words, which will likely not be the medical terminology a doctor would use. The system could then suggest a possible diagnosis and schedule an appointment with a specialist.

- Summarized insights: Natural Language Processing (NLP) can enable machines to read and summarize text, which is a huge timesaver in many research-intensive industries. The legal industry, for example, deals with huge volumes of complex documents, which can be quickly summarized by a system that has been trained with knowledge graphs specific to the legal domain.

- Data preparation: Collections of classification, recommendation, and anomaly detection capabilities can be packaged as data preparation tools that assist with data quality checks, domain/entity discovery, data profiling, and data similarity checks.

- Business Intelligence: The AI behind the applications above can be combined into specific solutions, such as BI applications. AI support for each individual step of data preparation and management, as well as for generating insights, forecasts, and recommendations, is quickly becoming standard in BI solutions.

- Predictive Maintenance: AI used to manage IoT systems and machinery can detect equipment wear or malfunction and schedule maintenance ahead of breakdowns to reduce unplanned downtime.

- Identity verification: AI for facial matching is becoming standard in identity verification solutions, enabling KYC (know your customer) processes to be done remotely. Facial recognition must be used responsibly, and many companies are clearly stating how they use facial recognition technologies so as not to infringe on the privacy rights of users.
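The recommendation engine entry above describes recommendations generated from a user’s own behavior and the behavior of similar users. One common way to do this is user-based collaborative filtering, sketched minimally below in Python. Everything in it is hypothetical and for illustration only: the users, items, and ratings are invented, users are compared with cosine similarity over the items they both rated, and each unseen item is scored by a similarity-weighted sum of other users’ ratings. Production recommenders are far more elaborate.

```python
from math import sqrt

# Hypothetical user -> {item: rating} data on a 1-5 scale, invented for illustration.
RATINGS: dict[str, dict[str, float]] = {
    "alice": {"laptop": 5, "mouse": 4, "monitor": 1},
    "bob": {"laptop": 5, "mouse": 5, "keyboard": 4},
    "carol": {"monitor": 5, "desk": 4, "laptop": 1},
}


def cosine_similarity(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity over the items both users rated (0.0 if none are shared)."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[i] * b[i] for i in shared)
    norm_a = sqrt(sum(a[i] ** 2 for i in shared))
    norm_b = sqrt(sum(b[i] ** 2 for i in shared))
    return dot / (norm_a * norm_b)


def recommend(user: str, ratings: dict[str, dict[str, float]],
              top_n: int = 3) -> list[tuple[str, float]]:
    """Rank items the user has not rated by a similarity-weighted sum of others' ratings."""
    target = ratings[user]
    scores: dict[str, float] = {}
    for other, their_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(target, their_ratings)
        if sim <= 0:
            continue  # ignore users with nothing in common
        for item, rating in their_ratings.items():
            if item not in target:  # only suggest items the user has not seen yet
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:top_n]


if __name__ == "__main__":
    for item, score in recommend("alice", RATINGS):
        print(f"{item}: {score:.2f}")
```

With this sample data, Alice is recommended the keyboard ahead of the desk, because Bob’s ratings agree with hers much more closely than Carol’s do. Using a weighted sum rather than a weighted average is a deliberate simplification that favors items endorsed by many similar users.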

The Impact of AI on Cybersecurity

Cyber threats are a constant challenge, and unfortunately, they continue to evolve.

Fighting a moving target is never easy, and using AI for cybersecurity can give an advantage.

AI Joins the Team

Most cybersecurity products collect huge amounts of data, which can swamp the human security analyst.

Their time is better used to address active threats, not trudge through endless data.

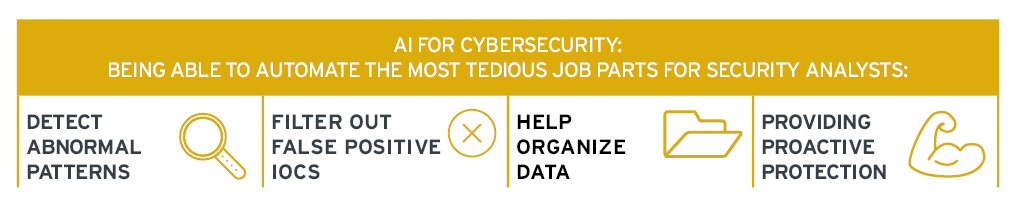

For security analysts, AI for cybersecurity means being able to automate the most tedious parts of their job, augmenting their ability to do the more creative and productive tasks.

AI can be used to detect abnormal patterns and filter out false positive Indicators of Compromise (IOCs).

AI can also be used to help organize data before it comes to the security analyst, especially to deal with unstructured data or data in real time.

This can make security information and event management (SIEM) results more useful, for example by ranking alerts by risk score or providing mitigation recommendations.
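Detecting abnormal patterns and ranking alerts by risk score can be illustrated with a simple statistical baseline. The sketch below is hypothetical and is not how any particular SIEM product scores alerts: for each host it learns the mean and standard deviation of failed logins per hour from invented history, flags a current value whose z-score exceeds an assumed threshold of 3, and multiplies that z-score by an assumed asset-criticality weight to get a risk score for ranking.

```python
from dataclasses import dataclass
from statistics import mean, stdev

# Hypothetical hourly failed-login counts per host, invented for illustration.
HISTORY: dict[str, list[float]] = {
    "web-01": [2, 3, 1, 2, 4, 3, 2, 3],
    "db-01": [0, 1, 0, 1, 0, 0, 1, 0],
    "hr-laptop": [1, 2, 1, 1, 2, 1, 2, 1],
}
CURRENT: dict[str, float] = {"web-01": 4, "db-01": 6, "hr-laptop": 9}
# Assumed business criticality of each asset; higher means a compromise costs more.
ASSET_WEIGHT: dict[str, float] = {"web-01": 1.5, "db-01": 3.0, "hr-laptop": 1.0}


@dataclass
class Alert:
    host: str
    value: float
    z_score: float
    risk: float


def detect_and_rank(history: dict[str, list[float]], current: dict[str, float],
                    asset_weight: dict[str, float], threshold: float = 3.0) -> list[Alert]:
    """Flag values far above each host's own baseline, then rank the alerts by risk."""
    alerts = []
    for host, value in current.items():
        mu, sigma = mean(history[host]), stdev(history[host])
        sigma = sigma or 1e-9  # flat history: any increase counts as highly unusual
        z = (value - mu) / sigma
        if z > threshold:  # only unusually high counts raise an alert
            alerts.append(Alert(host, value, z, z * asset_weight.get(host, 1.0)))
    return sorted(alerts, key=lambda a: a.risk, reverse=True)


if __name__ == "__main__":
    for alert in detect_and_rank(HISTORY, CURRENT, ASSET_WEIGHT):
        print(f"{alert.host}: z={alert.z_score:.1f}, risk={alert.risk:.1f}")
```

With the sample data, web-01 stays within its normal range and raises no alert. The laptop’s spike is the most statistically unusual, but the database server ranks first because of its higher assumed weight, which is the kind of prioritization that helps an analyst decide where to look first.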

Cognitive technologies can assist in providing proactive protection against phishing attacks or generating intelligence reports from unstructured data like formal research or dark web communications.

The Role of Explainability in AI

If a model cannot “explain” itself, there is no way for humans impacted by its decision to know the quality of the decision, or if there were unseen biases that influenced the decision.

Explainability plays a critical role in the advancement of AI.

More Than a Buzz Word

“Explainability” serves many purposes for AI. It is a form of accountability, of security, and of communication. The risk with more complex machine learning models and neural networks is that they become too abstract to interpret, weighing thousands of features to yield a single recommendation.

These models are often called “black box” models, because all the user sees is the input data and a recommendation with no insight into why the recommendation is what it is.

Explainability can be “added” to models that are not inherently interpretable, and these techniques are most developed for classification models. For example, suppose a classification model that is trained to identify trees in images labels an image of a bush “not a tree”. To explain why the model reached that decision, a mathematical technique like LIME (Local Interpretable Model-agnostic Explanations) can be applied to highlight the pixels that were most relevant to the decision. This creates a “heat map” that visually represents which areas of the picture were most influential when the model made its decision.
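LIME’s core idea can be shown without any image library. The sketch below is a simplified, hypothetical illustration, not the API of the open-source `lime` package: the bush image is reduced to four invented segments (LIME works with groups of pixels called superpixels), `toy_tree_model` is an invented stand-in for the classifier, and the kernel width of 0.25 and 500 samples are assumptions. The explanation comes from randomly switching segments off, querying the model, weighting each perturbed sample by its similarity to the original, and fitting a weighted linear surrogate whose coefficients serve as per-segment importance scores.

```python
import math
import random
from typing import Callable

# Hypothetical segments ("superpixels") of the bush image from the example above.
SEGMENTS = ["canopy", "sky", "trunk", "grass"]


def toy_tree_model(mask: list[int]) -> float:
    """Invented stand-in for a tree classifier: P(tree) given which segments are visible."""
    canopy, _sky, trunk, _grass = mask
    return 1 / (1 + math.exp(-(4 * trunk + 2 * canopy - 3)))


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve the small linear system a @ x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def explain(model: Callable[[list[int]], float], n_segments: int,
            n_samples: int = 500, kernel_width: float = 0.25, seed: int = 0) -> list[float]:
    """LIME-style local explanation: one importance score per segment."""
    rng = random.Random(seed)
    # 1. Perturb: the original image (all segments on) plus random on/off masks.
    masks = [[1] * n_segments] + [
        [rng.randint(0, 1) for _ in range(n_segments)] for _ in range(n_samples - 1)
    ]
    rows, weights, targets = [], [], []
    for mask in masks:
        # 2. Weight by proximity: cosine distance to the all-ones original,
        #    passed through an exponential kernel.
        distance = 1 - math.sqrt(sum(mask) / n_segments)
        weights.append(math.exp(-(distance ** 2) / kernel_width ** 2))
        rows.append([1.0] + [float(v) for v in mask])  # leading 1.0 is the intercept
        # 3. Query the black-box model on the perturbed input.
        targets.append(model(mask))
    # 4. Fit a weighted linear surrogate via the normal equations (X^T W X) b = X^T W y.
    p = n_segments + 1
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(p)]
            for i in range(p)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets)) for i in range(p)]
    return solve(xtwx, xtwy)[1:]  # drop the intercept


if __name__ == "__main__":
    scores = explain(toy_tree_model, len(SEGMENTS))
    for name, score in sorted(zip(SEGMENTS, scores), key=lambda pair: -pair[1]):
        print(f"{name:>6}: {score:+.3f}")
```

Because the surrogate is fit only around this one image, its scores describe the model’s behavior locally, not globally. In an image setting, these per-segment scores are what gets painted back onto the picture as the heat map; plain weighted least squares is used here for brevity, where LIME’s reference implementation uses a regularized fit.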

Explainability for different types of models is at varying stages of maturity, with development coming from both academia and the private sector. Developing better explainability for machine learning models is a must for the AI industry.

Explainability is a key step towards accountability, governance, and communication with end users as well as business users.

SWOTing AI Security: Strengths, Weaknesses, Opportunities, and Threats

Consider AI from all sides, including how the advantages can also become disadvantages and vice versa.

Here is the quick and dirty SWOT for AI.

Strengths

- Huge potential to handle massive volumes of data to yield actionable insights, recommendations, and forecasts

- Save time for human employees by completing repetitive aspects of their work

- Potential to create new revenue-generating products

Weaknesses

- Developing field with a very high profile and healthy skepticism from the general public

- Gaps in capabilities, including providing transparent explanations for all types of models

- Difficulty of adequately governing the use of data in model training and operations

Opportunities

- Application within the organization in many areas, including cybersecurity and business intelligence

- Application across all sectors including financial, utilities and energy, healthcare, automotive, logistics and supply chain.

- Benefits from cloud adoption and vast amounts of data produced and consumed by businesses

Threats

- Lack of transparency and accountability of proprietary models

- Questionable ability of models to serve all populations equally and with ethical behavior

- Fears (unfounded or not) of job displacement, rogue AI, and use for surveillance and social control may discourage organizations from implementing AI.

Who Is Bringing AI to Enterprises Today?

There are countless AI companies out there now, some specializing in a certain discipline of AI like NLP or computer vision, but there is also a cluster of vendors delivering AI Service Clouds specifically for the enterprise.

Vendors to Know About

AI is definitely not new, with origins in the 1950s.

But AI was launched back into the spotlight with advancements in cloud computing, which made the computations behind AI much more feasible for enterprise use.

Despite the abundance of computing power, there is a significant knowledge gap and lack of AI and machine learning experts.

This means that a market has opened up for AI Service Clouds – “build-it-yourself” toolkits that make AI more accessible to different business users.

The vendors that deliver these types of tools include AWS, Cognino, Google, IBM, Kortical, Microsoft, Salesforce, and many others. Of course, countless other vendors deliver specific AI solutions.

Where AI Is Supporting Identity & Access Management

AI can support the work of many different fields, IAM included.

AI’s strengths in ingesting large volumes of data and detecting patterns are particularly useful for IAM tasks.

Support for Repetitive Tasks

AI is able to support IAM by harnessing its ability to process large volumes of data and yield an actionable insight.

AI can be particularly helpful in automating repetitive processes and in detecting anomalies, which can be helpful to deliver adaptive authentication products, identity governance and administration (IGA) solutions, or privileged access management (PAM) solutions.
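A deliberately simple sketch can show how pattern detection feeds adaptive authentication. The example below is hypothetical and is not any IAM product’s logic: it scores a login attempt by how rarely the user’s history contains the same device, country, and time of day, then maps that score to allow, step-up, or deny using assumed thresholds (0.4 and 0.9). The frequency counts stand in for the learned models a real product would use, and the `Login` type, sample history, and function names are all invented for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Login:
    device: str
    country: str
    hour: int  # local hour of day, 0-23


# Hypothetical login history for one user, invented for illustration.
HISTORY = [Login("laptop-123", "DE", 9)] * 9 + [Login("phone-456", "DE", 20)]


def risk_score(history: list[Login], attempt: Login) -> float:
    """Average rarity of the attempt's attributes: 0.0 = always seen, 1.0 = never seen."""
    if not history:
        return 1.0  # no baseline yet, so treat the login as entirely unfamiliar
    n = len(history)
    seen = [
        Counter(h.device for h in history)[attempt.device],
        Counter(h.country for h in history)[attempt.country],
        # Six-hour bands, so 9:00 and 10:00 count as the same time of day.
        Counter(h.hour // 6 for h in history)[attempt.hour // 6],
    ]
    return sum(1 - count / n for count in seen) / len(seen)


def decide(score: float, step_up_at: float = 0.4, deny_at: float = 0.9) -> str:
    """Map a risk score to an access decision using assumed thresholds."""
    if score >= deny_at:
        return "deny"
    if score >= step_up_at:
        return "step_up"  # e.g. require a second factor before granting access
    return "allow"


if __name__ == "__main__":
    for attempt in [Login("laptop-123", "DE", 10), Login("phone-456", "DE", 21),
                    Login("unknown-789", "BR", 3)]:
        score = risk_score(HISTORY, attempt)
        print(attempt, f"score={score:.2f}", decide(score))
```

For the sample history, a login from the usual laptop is allowed, one from the rarely used phone late in the evening triggers a step-up, and one from an unknown device in a new country at 3:00 is denied.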

AI is also often used in facial recognition and matching tasks which are used in identity verification solutions, in supporting digital identity schemes, and as part of biometric authentication.

Curse or Blessing – Brave New World of Artificial Intelligence

As with any new development, there are positive and negative aspects to AI.

But the development still marches on. There are a few things to consider about the role AI will play in the digitally transformed organization.

Digital Transformation and Beyond

Artificial Intelligence is seen as a key enabler of digital transformation – of the business, of societal structures such as cities, and of entire economies.

Its ability to transform large volumes of data into actionable insights, recommendations, or forecasts for nearly countless applications means that it can be a powerhouse for change.

Its benefits in the enterprise include cost savings, the ability to redesign and automate processes, the ability to generate data-driven insights quickly without specialized knowledge in data science, and, of course, the wide range of AI-supported products and services.

High-level bodies support innovation in this space to encourage digital transformation for companies, cities, and countries.

Calls for caution and regulation also come from these high-level bodies, producing uncertainty and stalling AI development.

These calls are justified, but must be accompanied by clear and reasonable regulation so that enterprises may proceed with experimenting with and implementing artificial intelligence applications.